
With real IoT implementations taking place in market verticals such as smart home and smart office, researchers are exploring alternative input mechanisms to improve the user experience. Amazon's Alexa-controlled Echo speaker, Google Home, and Apple's Siri-based HomeKit are products designed to improve the user experience by accepting voice input. Voice-enabled digital assistant services and devices are becoming increasingly popular, and the ability to control devices with voice commands plays a significant role in the overall user experience.

In this blog we focus on integrating the Amazon Alexa-based Echo speaker into an IoT implementation to deliver a next-generation user experience. Amazon's Alexa-based Echo speaker, now in its second generation and with several derivative versions available, continues to expand its music, smart-home, and digital-assistant abilities. It is first and foremost a wireless speaker, but it is capable of much more. Using only your voice, you can play music, search the web, create to-do and shopping lists, shop online, get instant weather reports, and control popular smart-home products, all while your smartphone stays in your pocket.

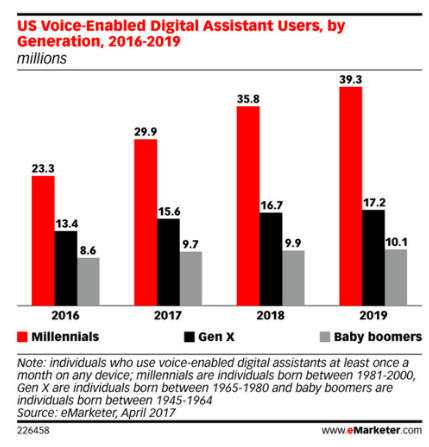

Market trends make it increasingly clear that voice interaction will soon become an expected offering, either as an alternative or as an add-on to traditional visual interfaces. The diagram below shows a forecast for this market sector.

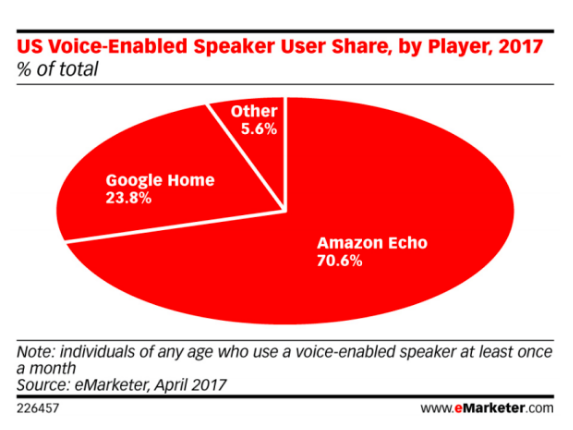

Alexa is an intelligent, voice-enabled personal assistant developed by Amazon, and it currently leads this market sector.

The smart home device market is highly competitive, with many companies introducing feature-rich smart home devices to the consumer market. Hence, offering additional features such as voice assistant support is essential to compete with other products.

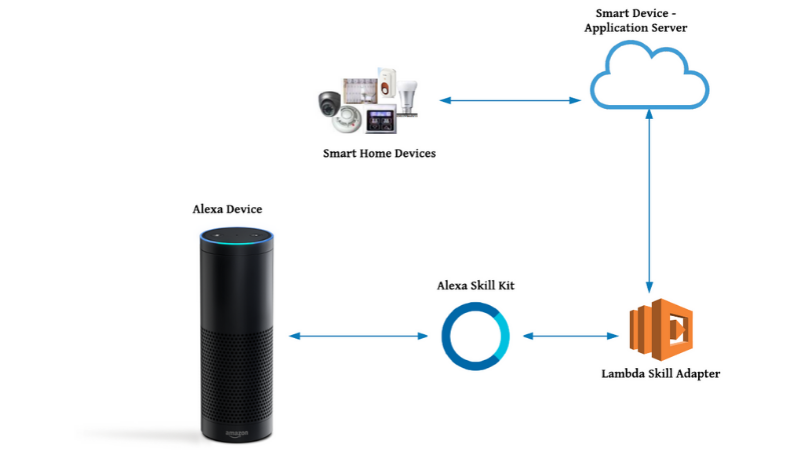

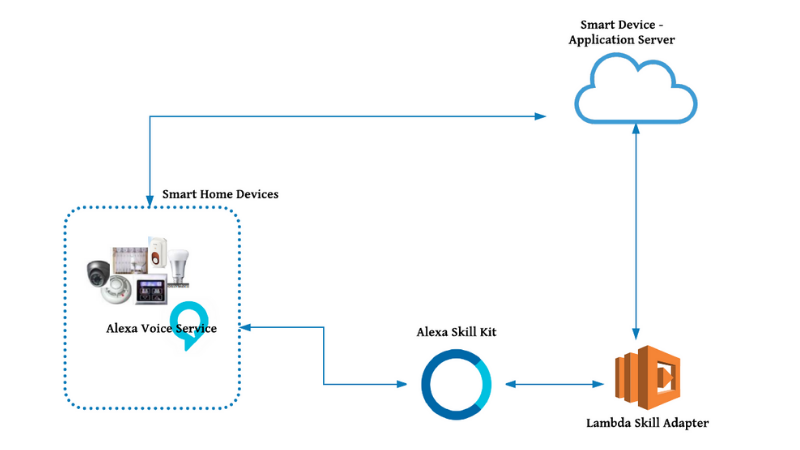

Alexa can either be integrated into the smart device hardware itself or be used as a separate service, for example through the Alexa mobile app or an Amazon Echo speaker. The following sections describe the architecture of both implementations.

Even if development of the smart device is already complete, we can still make it voice-enabled using external Alexa applications. The high-level architecture diagram is given below.

Amazon supports the Alexa SDK on various platforms, such as Linux and Raspberry Pi. Hence, smart devices can have built-in Alexa support. The high-level architecture is given below.

The major components in the above architectures are:

The Alexa service is invoked with the wake word "Alexa", followed by the user's utterance. Alexa-enabled devices continuously listen to surrounding speech, monitoring for the wake word, which is "Alexa" by default. These devices offer natural, lifelike voice recognition through sophisticated natural language processing (NLP) algorithms, and they are integrated with the Alexa Skills Kit.

The Alexa skill analyzes the voice request and extracts the intent, along with its slots if any. It then passes them to the Lambda function, which invokes the appropriate API service, extracts the data, and prepares a response. Alexa returns that response to the user as speech.
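
As a rough illustration of this flow, the Python sketch below shows a custom-skill Lambda handler. It reads the intent and slot values from an `IntentRequest` and returns a spoken reply in the custom-skill response format. The intent `ControlValveIntent`, the slots `DeviceName` and `State`, and the helper `call_device_api` are hypothetical. A real handler would call the smart device cloud at that point.

```python
from typing import Any, Dict

# Hypothetical names used only for illustration:
#   intent "ControlValveIntent", slots "DeviceName" and "State".


def build_speech_response(text: str, end_session: bool = True) -> Dict[str, Any]:
    """Wrap plain text in the JSON envelope a custom skill returns to Alexa."""
    return {
        "version": "1.0",
        "response": {
            "outputSpeech": {"type": "PlainText", "text": text},
            "shouldEndSession": end_session,
        },
    }


def get_slot_values(intent: Dict[str, Any]) -> Dict[str, str]:
    """Return {slot_name: spoken_value} for every slot Alexa actually filled."""
    slots = intent.get("slots", {})
    return {name: slot["value"] for name, slot in slots.items() if slot.get("value")}


def call_device_api(device: str, state: str) -> str:
    """Hypothetical placeholder for the call to the smart device cloud."""
    return f"The {device} is now {state}."


def lambda_handler(event: Dict[str, Any], context: Any) -> Dict[str, Any]:
    request = event["request"]
    if request["type"] != "IntentRequest":
        # e.g. a LaunchRequest: ask a question and keep the session open.
        return build_speech_response("Which device should I control?", end_session=False)

    intent = request["intent"]
    slots = get_slot_values(intent)
    if intent["name"] == "ControlValveIntent" and "DeviceName" in slots and "State" in slots:
        return build_speech_response(call_device_api(slots["DeviceName"], slots["State"]))
    return build_speech_response("Sorry, I didn't understand that request.")
```

Consider a request whose `ControlValveIntent` carries `DeviceName` = "garden valve" and `State` = "open". It produces the reply "The garden valve is now open." Real requests carry more fields (session, context, and so on), but the handler reads only the ones it needs.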

An Alexa skill consists of two main components: a skill adapter (the skill service) and a skill interface. The code is written in the skill adapter, and the developer configures the skill interface through Amazon's Alexa developer portal. The interaction between the code in the skill service and the configuration in the skill interface yields a working skill.

The skill service is where the business logic is implemented. It resides in the cloud and hosts the code that receives JSON payloads from Alexa, and it determines what actions to take in response to the user's speech. The skill service layer manages HTTP requests, user account information, session processing, and database access; all of this behavior is the concern of the skill service.
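
The following minimal Python sketch makes the session-handling part concrete. It assumes a Lambda-hosted skill service that routes by request type and uses session attributes to remember state between turns of a conversation. `DeviceStatusIntent`, the `DeviceName` slot, and the `lastDevice` attribute are hypothetical names. Persistent data such as user accounts would live in a database rather than in the session.

```python
from typing import Any, Dict

# Hypothetical names: intent "DeviceStatusIntent", slot "DeviceName",
# and the session attribute "lastDevice".


def respond(text: str, attributes: Dict[str, Any], end_session: bool) -> Dict[str, Any]:
    """Build a response that carries session attributes into the next turn."""
    return {
        "version": "1.0",
        "sessionAttributes": attributes,
        "response": {
            "outputSpeech": {"type": "PlainText", "text": text},
            "shouldEndSession": end_session,
        },
    }


def on_launch(request: Dict[str, Any], attributes: Dict[str, Any]) -> Dict[str, Any]:
    return respond("Welcome. Which device would you like to check?", attributes, False)


def on_intent(request: Dict[str, Any], attributes: Dict[str, Any]) -> Dict[str, Any]:
    intent = request["intent"]
    if intent["name"] != "DeviceStatusIntent":
        return respond("Sorry, I can't help with that yet.", attributes, True)
    device = intent.get("slots", {}).get("DeviceName", {}).get("value")
    if device:
        attributes["lastDevice"] = device  # remember it for follow-up questions
    else:
        device = attributes.get("lastDevice")  # no slot this turn: reuse the last device
    if device is None:
        return respond("Which device do you mean?", attributes, False)
    return respond(f"Checking the status of the {device}.", attributes, False)


def on_session_ended(request: Dict[str, Any], attributes: Dict[str, Any]) -> Dict[str, Any]:
    # Alexa does not speak a reply to SessionEndedRequest; return an empty response.
    return {"version": "1.0", "response": {}}


HANDLERS = {
    "LaunchRequest": on_launch,
    "IntentRequest": on_intent,
    "SessionEndedRequest": on_session_ended,
}


def lambda_handler(event: Dict[str, Any], context: Any) -> Dict[str, Any]:
    # Alexa sends back the sessionAttributes we returned on the previous turn.
    attributes = dict(event.get("session", {}).get("attributes") or {})
    request = event["request"]
    handler = HANDLERS.get(request["type"])
    if handler is None:
        return respond("Sorry, something went wrong.", attributes, True)
    return handler(request, attributes)
```

Alexa echoes `sessionAttributes` back in the next request of the same session. A follow-up question with no device slot can therefore reuse the device from the previous turn.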

The interface configuration is the second part of creating a skill. This is where we specify the sample utterances used to process the user's spoken words, and the interaction model resolves those spoken words to specific intents. The skill interface is available in the Alexa Developer Portal.
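
The Python sketch below lays out a tiny interaction model in the same shape as the ASK interaction model JSON. That is, a `languageModel` inside `interactionModel`, holding `invocationName`, `intents`, and `types`. It pairs the model with a toy resolver. The resolver only illustrates the idea of mapping utterances to intents and slots. Alexa's own language understanding is far more tolerant and is not regex-based. All intent, slot, and type names are hypothetical.

```python
import re
from typing import Dict, Optional, Tuple

# Interaction model laid out like the ASK JSON schema.
# The invocation name, intent, slot and slot-type names are hypothetical.
INTERACTION_MODEL = {
    "interactionModel": {
        "languageModel": {
            "invocationName": "home hub",
            "intents": [
                {
                    "name": "ControlValveIntent",
                    "slots": [
                        {"name": "State", "type": "VALVE_STATE"},
                        {"name": "DeviceName", "type": "DEVICE_NAME"},
                    ],
                    "samples": ["{State} the {DeviceName}", "please {State} the {DeviceName}"],
                },
                {"name": "AMAZON.HelpIntent", "samples": []},
            ],
            "types": [
                {"name": "VALVE_STATE", "values": [{"name": {"value": "open"}}, {"name": {"value": "close"}}]},
                {"name": "DEVICE_NAME", "values": [{"name": {"value": "garden valve"}}, {"name": {"value": "main valve"}}]},
            ],
        }
    }
}


def sample_to_regex(sample: str) -> "re.Pattern[str]":
    """Turn 'please {State} the {DeviceName}' into a regex with named groups."""
    # re.split with a capturing group alternates literal text and slot names.
    parts = re.split(r"\{(\w+)\}", sample)
    pattern = "".join(
        re.escape(part) if i % 2 == 0 else f"(?P<{part}>.+?)" for i, part in enumerate(parts)
    )
    return re.compile(f"^{pattern}$", re.IGNORECASE)


def resolve(utterance: str, model: Dict = INTERACTION_MODEL) -> Tuple[Optional[str], Dict[str, str]]:
    """Toy resolver: map an utterance to (intent name, slot values) using the samples."""
    language_model = model["interactionModel"]["languageModel"]
    type_values = {
        t["name"]: {v["name"]["value"] for v in t["values"]} for t in language_model["types"]
    }
    for intent in language_model["intents"]:
        slot_types = {s["name"]: s["type"] for s in intent.get("slots", [])}
        for sample in intent["samples"]:
            match = sample_to_regex(sample).match(utterance.strip())
            if match is None:
                continue
            slots = {name: value.lower() for name, value in match.groupdict().items()}
            # Accept the match only if every slot value belongs to its slot type.
            if all(value in type_values[slot_types[name]] for name, value in slots.items()):
                return intent["name"], slots
    return None, {}
```

With this model, `resolve("please open the garden valve")` returns `("ControlValveIntent", {"State": "open", "DeviceName": "garden valve"})`. An utterance that matches no sample resolves to `(None, {})`.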

Amazon provides the Alexa Skills Kit (ASK), a collection of self-service APIs, tools, documentation, and code samples that makes it fast and easy to add skills to Alexa. The Alexa Skills Kit triggers the skill adapter Lambda function, where the business logic is written.

The Alexa Voice Service (AVS) is used to voice-enable smart devices that have a microphone and speaker. Once integrated, the device has access to Alexa's built-in capabilities. An AVS-enabled device has an audio signal processor that receives the voice input and cleans it up for further processing. The processed audio is then split into two sections. The first section is the wake word ("Alexa"). The second section is passed to the audio input processor, which handles the audio input that is sent to AVS.
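
The split between the wake word and the command can be sketched as follows. This is a simplified Python model, not the AVS Device SDK. The "audio signal processor" here only removes DC offset, and `is_wake_word` is a hypothetical stand-in for a real wake word engine. End of speech is approximated by a run of low-energy frames.

```python
from typing import Callable, List, Sequence

Frame = List[float]


def clean_frame(frame: Sequence[float]) -> Frame:
    """Stand-in for the audio signal processor: only removes the DC offset."""
    mean = sum(frame) / len(frame)
    return [sample - mean for sample in frame]


def frame_energy(frame: Sequence[float]) -> float:
    """Mean squared amplitude of one frame."""
    return sum(sample * sample for sample in frame) / len(frame)


def capture_command(
    frames: Sequence[Sequence[float]],
    is_wake_word: Callable[[Frame], bool],
    silence_threshold: float = 1e-3,
    max_silent_frames: int = 3,
) -> List[Frame]:
    """Return the cleaned frames that follow the wake word, up to the end of speech."""
    captured: List[Frame] = []
    awake = False
    silent_run = 0
    for raw in frames:
        frame = clean_frame(raw)
        if not awake:
            # Section 1: frames go only to the (hypothetical) wake word detector.
            awake = is_wake_word(frame)
            continue
        # Section 2: frames go to the audio input processor (and on to AVS).
        captured.append(frame)
        silent_run = silent_run + 1 if frame_energy(frame) < silence_threshold else 0
        if silent_run >= max_silent_frames:
            break  # a run of quiet frames is treated as the end of the utterance
    return captured
```

On a real device, the audio input processor would stream the captured audio to AVS rather than return it as a list.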

The Smart Device Cloud server interfaces with the smart devices. It receives requests from Alexa and forwards the corresponding commands to the IoT devices.
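
Here is a minimal sketch of that forwarding role. It assumes each device is reached through a hub (as with the Z-Wave hub described later) and that hubs poll the cloud for pending commands. The class and method names are hypothetical. A production cloud would more likely push commands over a persistent connection or a message broker than keep them in an in-memory queue.

```python
from collections import defaultdict, deque
from typing import Any, Deque, Dict, List, Optional


class SmartDeviceCloud:
    """Hypothetical relay: takes commands that originate from Alexa and queues them per hub."""

    def __init__(self) -> None:
        self.device_to_hub: Dict[str, str] = {}
        self.hub_queues: Dict[str, Deque[Dict[str, Any]]] = defaultdict(deque)

    def register_device(self, device_id: str, hub_id: str) -> None:
        """Record which hub a device is attached to."""
        self.device_to_hub[device_id] = hub_id

    def forward(self, device_id: str, action: str, value: Optional[Any] = None) -> Dict[str, Any]:
        """Queue a command for the hub that owns the device."""
        hub_id = self.device_to_hub.get(device_id)
        if hub_id is None:
            raise KeyError(f"unknown device: {device_id}")
        command = {"device_id": device_id, "action": action, "value": value}
        self.hub_queues[hub_id].append(command)
        return command

    def poll(self, hub_id: str) -> List[Dict[str, Any]]:
        """Called by a hub to fetch (and clear) its pending commands."""
        queue = self.hub_queues[hub_id]
        commands = list(queue)
        queue.clear()
        return commands
```

Each hub would then translate the commands it polls into its own protocol, for example Z-Wave, to drive the physical device.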

The first step in building a new skill is to decide what the skill will do. The functionality of the skill determines how it integrates with Alexa and what we need to build. The Alexa Skills Kit supports building different types of skills, as listed below:

Smart home skills and custom skills are the most useful for controlling smart devices. A custom skill can handle any kind of request, as long as there is a proper interaction model and code to fulfill the request. The Smart Home Skill API, on the other hand, is Amazon's API for controlling smart home devices such as cameras, lights, locks, thermostats, and smart TVs. It simplifies development because a prebuilt voice interaction model is available.

Custom skills provide the best user experience since we can define all possible utterances.

| Smart Home Skill | Custom Skill |
|---|---|
| Easy to use for customers | Most flexible, but most complex for customers |
| No invocation name is required. Customers can say "Alexa, turn on living room lights" | An invocation name is required, and customers need to remember it. Customers can say "Alexa, ask Uber to request a ride" |
| Built-in interaction model | We design our own interaction model |
| Only a limited set of device types, such as thermostats and lights, can be linked to a smart home skill. Custom sensors or devices such as valves and security devices cannot be integrated. | Any device can be integrated |
| Skill must use an AWS Lambda function | Skill can use a Lambda function or any other server |
| Intended for cloud-connected smart home devices | Can be used for any purpose |
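
For comparison with a custom skill, a smart home skill receives structured directives instead of free-form intents. Below is a hedged Python sketch that handles `Alexa.PowerController` `TurnOn`/`TurnOff` directives and replies with an `Alexa` `Response` event reporting the new `powerState`. The message layout follows our understanding of the Smart Home Skill API (payload version 3) and should be checked against Amazon's reference. `DEVICE_POWER` and the endpoint ID used below are hypothetical.

```python
import uuid
from datetime import datetime, timezone
from typing import Any, Dict, Optional

# Hypothetical in-memory stand-in for the smart device cloud: endpointId -> "ON"/"OFF".
DEVICE_POWER: Dict[str, str] = {}


def make_header(namespace: str, name: str, correlation_token: Optional[str]) -> Dict[str, Any]:
    """Event header; the correlation token from the directive is echoed back."""
    header = {"namespace": namespace, "name": name, "payloadVersion": "3", "messageId": str(uuid.uuid4())}
    if correlation_token is not None:
        header["correlationToken"] = correlation_token
    return header


def handle_power_directive(event: Dict[str, Any]) -> Dict[str, Any]:
    directive = event["directive"]
    header = directive["header"]
    endpoint = directive["endpoint"]
    token = header.get("correlationToken")

    if header["namespace"] != "Alexa.PowerController" or header["name"] not in ("TurnOn", "TurnOff"):
        return {
            "event": {
                "header": make_header("Alexa", "ErrorResponse", token),
                "endpoint": {"endpointId": endpoint["endpointId"]},
                "payload": {"type": "INVALID_DIRECTIVE", "message": "Unsupported directive"},
            }
        }

    power_state = "ON" if header["name"] == "TurnOn" else "OFF"
    DEVICE_POWER[endpoint["endpointId"]] = power_state  # a real skill calls the device cloud here

    return {
        "event": {
            "header": make_header("Alexa", "Response", token),
            "endpoint": {"scope": endpoint.get("scope"), "endpointId": endpoint["endpointId"]},
            "payload": {},
        },
        "context": {
            "properties": [
                {
                    "namespace": "Alexa.PowerController",
                    "name": "powerState",
                    "value": power_state,
                    "timeOfSample": datetime.now(timezone.utc).strftime("%Y-%m-%dT%H:%M:%SZ"),
                    "uncertaintyInMilliseconds": 500,
                }
            ]
        },
    }
```

The Lambda function that a smart home skill requires would typically dispatch on the directive's namespace and call handlers like this one.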

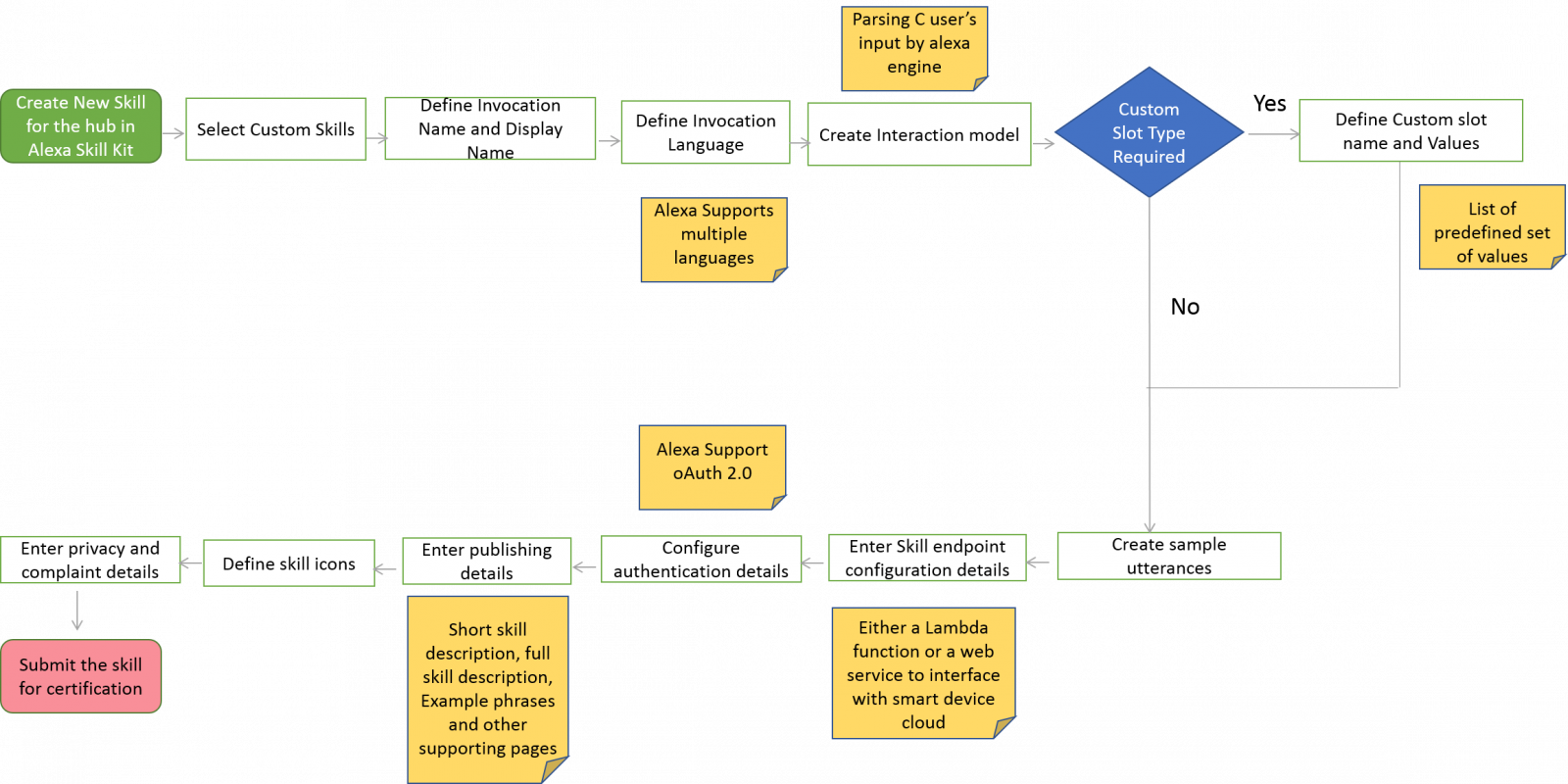

The process of integrating Alexa with a hub that controls Z-Wave devices (valves, thermostats, etc.) is given below. It can be divided into two main activities.

Create a skill in Amazon Alexa and link it with the Smart Device Cloud

Once the skill is created, hub users can add the new skill and link their hub with their Alexa device. Amazon handles the speech-to-text conversion and invokes the correct interaction model for further processing.

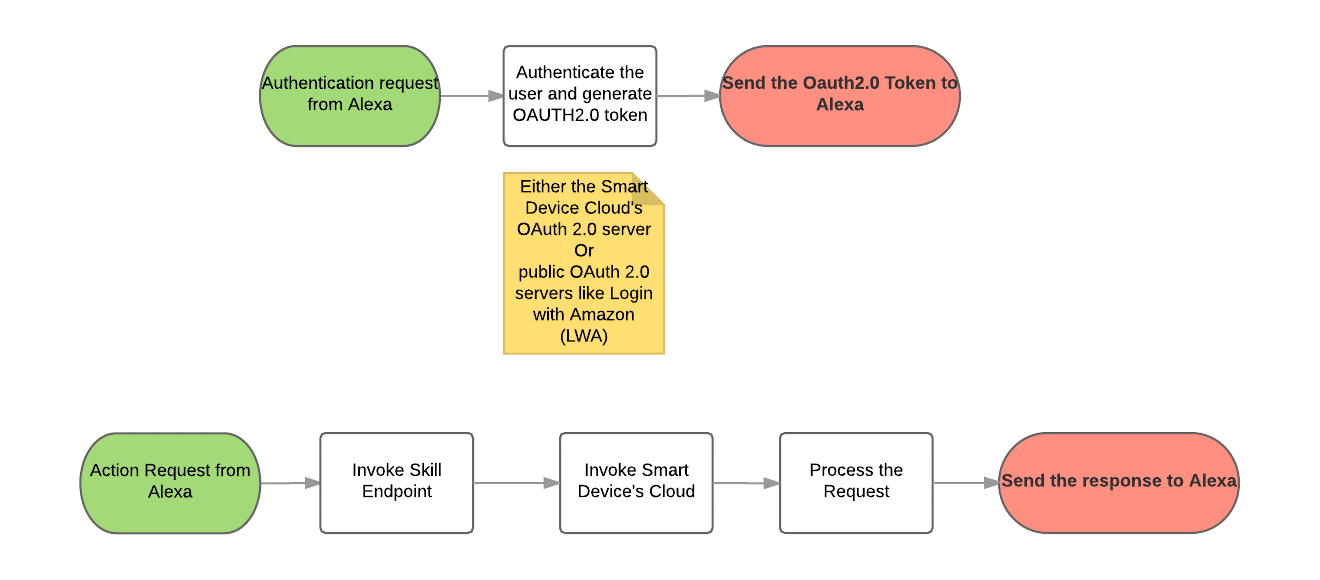

Enable the Smart Device Cloud for Alexa support

Alexa uses OAuth 2.0 authentication (account linking) to validate Smart Device users, so the Smart Device Cloud must support OAuth 2.0. The Smart Device Cloud must also support the operations invoked by Alexa and return proper response messages to ensure a good user experience.
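
The Python sketch below illustrates both requirements. It validates the OAuth 2.0 access token that Alexa forwards with a discovery request, then answers with the user's devices as `Alexa.Discovery` endpoints. The token store, device store, and manufacturer name are hypothetical, and the field layout reflects our reading of the Smart Home Skill API v3. Here an invalid token simply yields no devices. That is a simplification, not the recommended error handling.

```python
import time
import uuid
from typing import Any, Dict, List, Optional, Tuple

# Hypothetical stores; a real Smart Device Cloud would query its OAuth 2.0
# authorization server and device database instead.
ACCESS_TOKENS: Dict[str, Tuple[str, float]] = {}    # access token -> (user id, expiry epoch seconds)
USER_DEVICES: Dict[str, List[Dict[str, str]]] = {}  # user id -> [{"id", "name", "category"}]


def user_for_token(token: str, now: Optional[float] = None) -> Optional[str]:
    """Return the user who owns a valid, unexpired access token, else None."""
    entry = ACCESS_TOKENS.get(token)
    if entry is None:
        return None
    user_id, expires_at = entry
    current = time.time() if now is None else now
    return user_id if current < expires_at else None


def to_endpoint(device: Dict[str, str]) -> Dict[str, Any]:
    """Describe one device as a discoverable endpoint that supports on/off."""
    return {
        "endpointId": device["id"],
        "manufacturerName": "Example Manufacturer",  # hypothetical
        "friendlyName": device["name"],
        "description": device["name"],
        "displayCategories": [device["category"]],
        "capabilities": [
            {"type": "AlexaInterface", "interface": "Alexa", "version": "3"},
            {
                "type": "AlexaInterface",
                "interface": "Alexa.PowerController",
                "version": "3",
                "properties": {
                    "supported": [{"name": "powerState"}],
                    "proactivelyReported": False,
                    "retrievable": True,
                },
            },
        ],
    }


def handle_discovery(event: Dict[str, Any]) -> Dict[str, Any]:
    directive = event["directive"]
    token = directive["payload"]["scope"]["token"]  # issued through OAuth 2.0 account linking
    user_id = user_for_token(token)
    # Simplification: an unknown or expired token yields an empty device list.
    devices = USER_DEVICES.get(user_id, []) if user_id else []
    return {
        "event": {
            "header": {
                "namespace": "Alexa.Discovery",
                "name": "Discover.Response",
                "payloadVersion": "3",
                "messageId": str(uuid.uuid4()),
            },
            "payload": {"endpoints": [to_endpoint(d) for d in devices]},
        }
    }
```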

Some best practices and tips for developing Alexa skills are listed below:

Alexa is the most popular voice-enabled assistant, but it is not the only one. Gadgeon can integrate other services such as Google Home, Apple HomeKit, and IFTTT to make your smart device smarter. Gadgeon, an IoT software development company with years of experience in home automation and IoT, can help you navigate the challenges and deliver the most appropriate user experience for voice-enabled solutions.