
Most banks already have the APIs they need. The challenge in building AI capability isn't a shortage of integrations; it's architecture. Point-to-point integrations move data but destroy context. Event-driven architecture turns existing APIs into a shared business language that AI can actually use.

In my last post, I made the case that AI isn't failing in banking because of AI—it's failing because most institutions lack the data foundation that AI requires to reason effectively. Systems don't understand each other. There's no shared semantic model. No enterprise data layer.


The response to that post was striking. Dozens of executives reached out with some version of the same message: "You just described our situation exactly. But what do we actually do about it?"

That's what this post is about.

Because here's what I've learned after years of helping financial institutions untangle their technology: the raw materials for solving this problem are already sitting inside your organization. You don't need to rip and replace. You don't need a three-year transformation program. You don't need to consolidate onto a single vendor's platform.

You need to use what you already have—differently.

Let me ask you a question: How many of your core systems expose APIs?

If you're running a modern loan origination system, the answer is almost certainly yes. Same for your CRM, your digital banking platform, your document management system, your marketing automation tools, your servicing platform.

Over the past decade, the SaaS vendors serving financial services have invested heavily in API capabilities. They had to—their customers demanded it. The era of completely closed, proprietary systems is largely behind us.

So when I look at a typical mid-sized bank or IMB running 15-25 platforms, I don't see a mess that needs to be replaced. I see a collection of systems that are already capable of sharing information programmatically.

The APIs exist. The capabilities are there.

The problem is how we're using them.

Here's the pattern I see at almost every institution I work with:

At some point, someone needed System A to talk to System B. Maybe it was pushing loan data from the LOS to the servicing platform. Maybe it was syncing contacts between the CRM and marketing automation. Maybe it was pulling pricing data into the POS.

So a developer built an integration. System A calls System B's API, passes some data, and gets a response. Problem solved.

Then someone needed System A to talk to System C. Another integration.

Then System B needed data from System D. Another integration.

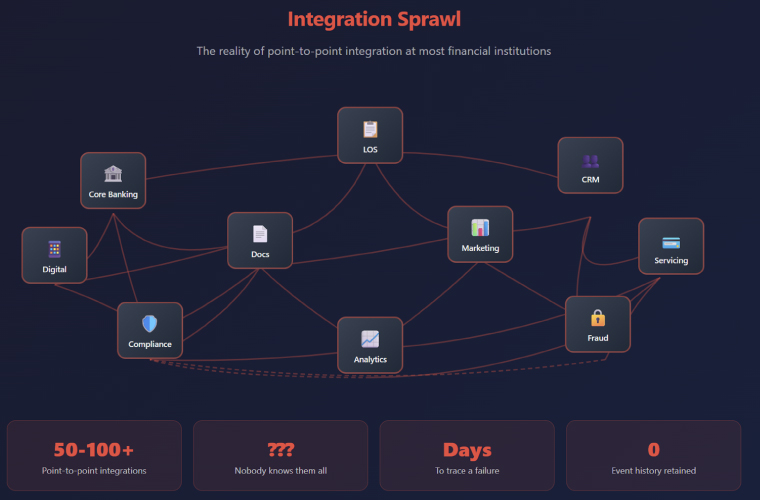

Fast forward a few years, and you have something that looks like this:

Except in reality, it's far messier than any diagram can capture. There are 40, 60, 80 point-to-point connections. Some were built by employees who left years ago. Some are documented; many aren't. Some are running on infrastructure that nobody wants to touch because it "just works" and nobody fully understands why.

This is integration sprawl. And it has some characteristics that make it deeply problematic:

It's brittle. When any system changes—an API version update, a schema modification, a vendor migration—multiple integrations can break. And tracing the failure through the dependency chain is detective work.

It's synchronous. Most point-to-point integrations are request-response. System A asks System B for something and waits for an answer. If System B is slow or down, System A is affected. Failures cascade.

It's vendor-specific. Each integration speaks the native language of the systems it connects. There's no abstraction, no common vocabulary. The integration between your LOS and servicing platform "knows" about loans in a completely different way than the integration between your CRM and marketing platform "knows" about customers.

It's impossible to reason about holistically. Nobody—and I mean nobody—has a complete mental model of how data flows through the organization. When an executive asks "what happens when a customer misses a payment?" the honest answer is often "it depends, and we'd have to trace through several systems to tell you."

And critically: it doesn't create data. It just moves data. All those integrations are copying information between systems, but they're not creating any durable, unified record of what's happening in your business. The data exists transiently, in flight, and then it's gone.

In my previous post, I argued that AI needs to understand relationships between things—customers, accounts, loans, events, interactions. It needs context.

Integration sprawl doesn't provide that. It provides plumbing.

Think about what your point-to-point integrations actually accomplish:

What they don't do is create an enterprise-wide understanding of what just happened.

When a loan application is submitted, that event ripples through your organization. It means something to originations. It means something to compliance. It means something to marketing. It means something to the branch that referred the customer.

But in a point-to-point world, there's no "loan application submitted" event that all those stakeholders can observe and respond to. There's just a series of isolated data transfers between pairs of systems, each blind to the others.

This is why AI initiatives struggle. They need access to the event stream of your business—the sequence of meaningful things that happen, in context, over time. Integration sprawl buries those events inside synchronous API calls that leave no trace.

Let me introduce a concept that changed how I think about system integration:

What if instead of systems talking to each other, they all talked to a shared "message bus"—and other systems listened?

Here's what I mean:

In the point-to-point model, when something significant happens in System A, System A is responsible for knowing who cares about it and calling each of them directly. System A becomes tightly coupled to Systems B, C, D, and E. If a new System F needs to know about these events, someone has to modify System A.
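To make that coupling concrete, here is a minimal sketch of the point-to-point pattern. Every name in it (`submit_application`, the `notify_*` functions, the `DownstreamUnavailable` exception) is hypothetical and stands in for real vendor API calls; it is an illustration, not a description of any particular system.

```python
class DownstreamUnavailable(Exception):
    """Raised when a called system cannot answer (hypothetical)."""


calls = []  # records which downstream systems were actually reached


def notify_servicing(loan: dict) -> str:
    calls.append("servicing")
    return "servicing ok"


def notify_compliance(loan: dict) -> str:
    calls.append("compliance")
    # Simulate System C being down during a synchronous call.
    raise DownstreamUnavailable("compliance system is down")


def notify_marketing(loan: dict) -> str:
    calls.append("marketing")
    return "marketing ok"


def submit_application(loan: dict) -> list:
    # System A hard-codes every system that cares about this event.
    # Adding a new System F means editing this function.
    results = []
    for call in (notify_servicing, notify_compliance, notify_marketing):
        results.append(call(loan))  # waits on each system in turn
    return results


try:
    submit_application({"loan_id": "L-1001"})
    outcome = "submitted"
except DownstreamUnavailable as exc:
    outcome = f"failed: {exc}"

print(outcome)  # failed: compliance system is down
print(calls)    # ['servicing', 'compliance']
```

One slow or unavailable system fails the whole submission, and marketing never hears about the application at all.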

In an event-driven model, when something significant happens in System A, System A simply announces it to the message bus: "Hey, a loan application was just submitted. Here are the relevant details."

System A doesn't know or care who's listening. It just publishes the event.

Meanwhile, Systems B, C, D, E, and F have all subscribed to the events they care about. When the "loan application submitted" event appears on the bus, each of them receives it and does whatever it needs to do.
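The publish-and-subscribe flow described above can be sketched in a few lines. This is a toy, in-process bus written only for illustration: the `MessageBus` class, the event name `LoanApplicationSubmitted`, and the payload fields are all assumptions, and a production deployment would use a real message broker instead.

```python
from collections import defaultdict
from typing import Callable, Dict, List

Handler = Callable[[dict], None]


class MessageBus:
    """Toy in-process bus: publishers announce, subscribers react."""

    def __init__(self) -> None:
        self._subscribers: Dict[str, List[Handler]] = defaultdict(list)

    def subscribe(self, event_type: str, handler: Handler) -> None:
        self._subscribers[event_type].append(handler)

    def publish(self, event_type: str, payload: dict) -> int:
        # The publisher never names a recipient; the bus looks them up.
        handlers = self._subscribers.get(event_type, [])
        for handler in handlers:
            handler(payload)
        return len(handlers)


bus = MessageBus()
received = []

# Two independent systems register interest in the same business event.
bus.subscribe("LoanApplicationSubmitted",
              lambda e: received.append(("compliance", e["loan_id"])))
bus.subscribe("LoanApplicationSubmitted",
              lambda e: received.append(("marketing", e["loan_id"])))

# System A announces what happened and moves on.
delivered = bus.publish("LoanApplicationSubmitted", {"loan_id": "L-1001"})

print(delivered)  # 2
print(received)   # [('compliance', 'L-1001'), ('marketing', 'L-1001')]
```

Adding a third subscriber is one more `subscribe` call; the publishing code does not change.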

This creates something fundamentally different:

Decoupling. Systems no longer need to know about each other. They just need to know how to publish and subscribe to business events. When System F comes along, it simply subscribes to the events it cares about—no changes required to any existing system.

Asynchronous operation. Events are published and delivered reliably, but publishers don't wait for subscribers to process them. If System C is slow or temporarily down, the events queue up and get delivered when it's ready. Failures don't cascade.
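Here is a rough sketch of that queueing behavior, assuming each subscriber has its own inbox that the bus can always write to. The `QueuedSubscriber` class and the `PaymentMissed` event are hypothetical; real brokers provide durable queues with far stronger guarantees than this in-memory stand-in.

```python
from collections import deque


class QueuedSubscriber:
    """Subscriber with its own inbox (hypothetical, in-memory only)."""

    def __init__(self, name: str) -> None:
        self.name = name
        self.inbox = deque()
        self.processed = []
        self.online = True

    def deliver(self, event: dict) -> None:
        # Delivery always succeeds from the publisher's point of view.
        self.inbox.append(event)
        if self.online:
            self.drain()

    def drain(self) -> None:
        # Work through queued events in arrival order.
        while self.inbox:
            self.processed.append(self.inbox.popleft())


def publish(subscribers: list, event: dict) -> None:
    for subscriber in subscribers:
        subscriber.deliver(event)


servicing = QueuedSubscriber("servicing")
compliance = QueuedSubscriber("compliance")
compliance.online = False  # System C is temporarily down

publish([servicing, compliance], {"type": "PaymentMissed", "loan_id": "L-1001"})
publish([servicing, compliance], {"type": "PaymentMissed", "loan_id": "L-1002"})

queued_while_down = len(compliance.inbox)      # events waiting for System C
servicing_done = len(servicing.processed)      # servicing was unaffected

compliance.online = True
compliance.drain()                             # catch up once it recovers

print(queued_while_down, servicing_done, len(compliance.processed))  # 2 2 2
```

The publisher's calls returned normally even while one subscriber was offline, which is the property that keeps failures from cascading.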

Real-time visibility. The message bus becomes a living record of what's happening across your enterprise, right now. You can observe the event stream and understand the state of your business in ways that were never possible with point-to-point integration.

And here's the key for AI: the event stream persists. Unlike synchronous API calls that happen and vanish, events can be stored, indexed, and analyzed. They become the raw material for understanding your business over time.
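As an illustration of what "persists" can mean in practice, the sketch below keeps an append-only log of events and replays it later. The `EventLog` class is hypothetical and stores records in a Python list as a stand-in for durable storage; the event names and fields are assumptions as well.

```python
import json
from datetime import datetime, timedelta, timezone
from typing import Iterator, Optional


class EventLog:
    """Append-only event record (a list stands in for durable storage)."""

    def __init__(self) -> None:
        self._lines = []

    def append(self, event_type: str, payload: dict, occurred_at: datetime) -> int:
        record = {
            "type": event_type,
            "occurred_at": occurred_at.isoformat(),
            "payload": payload,
        }
        self._lines.append(json.dumps(record, sort_keys=True))
        return len(self._lines) - 1  # position of the event in the log

    def replay(self, event_type: Optional[str] = None) -> Iterator[dict]:
        # Events come back in the order they happened, optionally filtered.
        for line in self._lines:
            record = json.loads(line)
            if event_type is None or record["type"] == event_type:
                yield record


log = EventLog()
t0 = datetime(2024, 1, 15, 9, 0, tzinfo=timezone.utc)
log.append("LoanApplicationSubmitted", {"loan_id": "L-1001"}, t0)
log.append("DocumentReceived",
           {"loan_id": "L-1001", "document_type": "W2"},
           t0 + timedelta(hours=2))
log.append("LoanApplicationSubmitted", {"loan_id": "L-1002"},
           t0 + timedelta(days=1))

# Reconstruct the history of one loan long after the events occurred.
history = [r["type"] for r in log.replay()
           if r["payload"]["loan_id"] == "L-1001"]
submissions = list(log.replay("LoanApplicationSubmitted"))

print(history)           # ['LoanApplicationSubmitted', 'DocumentReceived']
print(len(submissions))  # 2
```

A synchronous API call leaves nothing comparable behind to query.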

I want to be specific about what flows through this message bus, because it's not just "data."

It's business events—meaningful things that happen, expressed in a vocabulary that makes sense to your organization.

Here are examples of the kinds of events a bank or mortgage lender might publish:

Notice what these have in common: they're expressed in business terms, not system terms.

A "Document Received" event doesn't care which system captured the document. It doesn't expose the internal schema of your document management platform. It simply says: "This document, of this type, for this loan, was received at this time."

This abstraction is powerful. It means that the consumers of these events—whether they're other operational systems, analytics platforms, or AI applications—don't need to understand the internals of the producing system. They just need to understand the business vocabulary.
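One way to picture a business-level event is as a small, immutable record that carries only business terms. The field names and the message envelope below are assumptions chosen to mirror the "Document Received" description above; they are not a standard schema.

```python
import json
from dataclasses import asdict, dataclass
from datetime import datetime, timezone


@dataclass(frozen=True)
class DocumentReceived:
    """This document, of this type, for this loan, received at this time."""

    document_id: str
    document_type: str
    loan_id: str
    received_at: datetime

    def to_message(self) -> str:
        body = asdict(self)
        # Serialize the timestamp in ISO 8601 so any consumer can parse it.
        body["received_at"] = self.received_at.isoformat()
        return json.dumps({"event": "DocumentReceived", "data": body},
                          sort_keys=True)


event = DocumentReceived(
    document_id="DOC-42",
    document_type="W2",
    loan_id="L-1001",
    received_at=datetime(2024, 1, 15, 9, 30, tzinfo=timezone.utc),
)
message = event.to_message()
print(message)
```

Nothing in the message says which platform captured the document or how that platform stores it internally.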

You're not integrating systems anymore. You're creating a shared language for your enterprise.

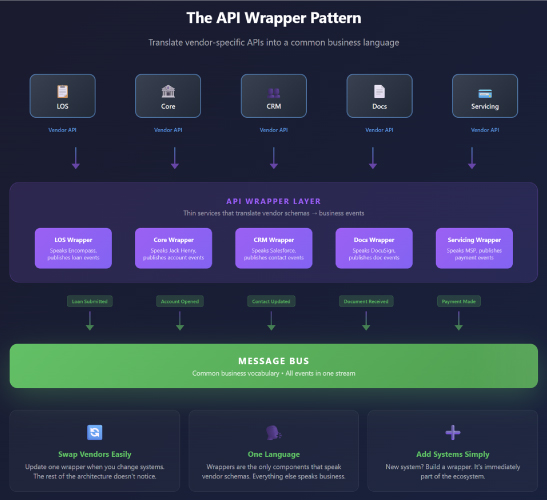

"But wait," you might be thinking. "My systems don't publish events to a message bus. They expose APIs."

Correct. And this is where your existing APIs become the asset.

The pattern is straightforward:

The wrappers are the only components that need to understand vendor-specific APIs. Everything else in your architecture just speaks the common event language.

When a vendor updates their API? You update one wrapper. When you swap out one platform for another? You update one wrapper, and the rest of the architecture doesn't notice. When you add a new system that needs to participate? You build a wrapper for it, and it's immediately part of the ecosystem.
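A small sketch shows why swapping a platform touches only its wrapper. Both vendor payload shapes here are invented for illustration (`LoanNumber`, `ReqAmt`, `requested_amount_cents` are hypothetical field names), as is the common `LoanApplicationSubmitted` event they are translated into.

```python
def vendor_a_wrapper(raw: dict) -> dict:
    """Translate a hypothetical vendor A payload into the common event."""
    return {
        "event": "LoanApplicationSubmitted",
        "loan_id": raw["LoanNumber"],
        "amount": float(raw["ReqAmt"]),  # vendor A sends amounts as strings
    }


def vendor_b_wrapper(raw: dict) -> dict:
    """Translate a hypothetical vendor B payload into the same event."""
    application = raw["application"]
    return {
        "event": "LoanApplicationSubmitted",
        "loan_id": application["id"],
        "amount": application["requested_amount_cents"] / 100,  # cents to dollars
    }


def consumer(event: dict) -> str:
    # Knows only the common vocabulary, never a vendor's field names.
    return f'{event["loan_id"]}:{event["amount"]:.2f}'


from_a = consumer(vendor_a_wrapper({"LoanNumber": "L-1001", "ReqAmt": "250000"}))
from_b = consumer(vendor_b_wrapper(
    {"application": {"id": "L-1001", "requested_amount_cents": 25000000}}
))

print(from_a)  # L-1001:250000.00
print(from_b)  # L-1001:250000.00
```

The consumer produces the same result from either source, so replacing vendor A with vendor B changes one function and nothing downstream.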

This is how you turn integration sprawl into integration architecture.

I don't want this to sound theoretical. Let me ground it in practical outcomes:

I want to address an objection I hear frequently: "This sounds like a massive infrastructure project. We can't afford a multi-year transformation."

I understand that concern. I've seen too many institutions get burned by ambitious platform consolidation efforts that consumed years and budgets without delivering value.

But event-driven integration is fundamentally different:

The institutions I've seen succeed with this approach treat it as a program, not a project. They establish the pattern, build the core infrastructure, then systematically migrate integration responsibility from point-to-point spaghetti to the event-driven architecture over time.

Eighteen months in, they have dramatically better visibility into their operations, more resilient integrations, and—critically—the data foundation to pursue AI initiatives that actually work.

Here's what I'd ask you to consider:

Your systems already expose APIs. That means they're already capable of sharing information. The capability exists.

The question is whether you're going to continue using that capability to build more point-to-point connections—adding to the sprawl, increasing the brittleness, burying business events in synchronous calls that leave no trace.

Or whether you're going to use those same APIs to feed a shared event stream that creates visibility, enables flexibility, and builds the foundation for everything you want to do with AI.

The raw materials are the same. The architecture determines what you can build with them.

You don't need fewer systems. You need them to speak a common language.

In my final post in this series, I'll show where this leads: from message bus to data lakehouse to AI-ready data tier. We'll look at how the event stream becomes the feed for your analytical and AI capabilities—and what it takes to get from "events flowing" to "AI reasoning."

Because the message bus isn't the destination. It's the highway that makes the destination reachable.